NPU Processors – Neural Processing Unit | 2024

Neural processors do not have to be large: there are also small chips that fit inside a smartphone. Most often these are tensor processors, designed to accelerate the tensor operations used by machine learning libraries such as TensorFlow, but not necessarily. They can also be coprocessors: additional modules built into the main chip to speed up calculations.

Almost every major manufacturer of smartphone chips now has its own neural processors:

- Apple: A-series Bionic chips with the Neural Engine coprocessor;

- Huawei: Kirin 970 with built-in NPU (Neural Network Processing Unit);

- Oppo: MariSilicon X;

- Samsung: Exynos 9 Series 9820.

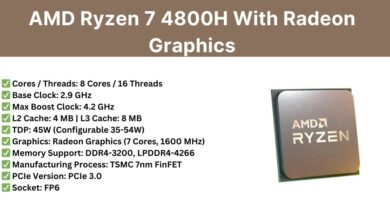

Performance Comparison:

- CPU (a very powerful AMD Ryzen Threadripper 3990X) – 3 trillion operations per second;

- GPU – 20 trillion operations per second;

- NPU – 480 trillion operations per second.
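To put these figures in proportion, the short Python sketch below divides each one by the CPU figure. It takes the article's numbers at face value. Peak figures like these may be measured at different numeric precisions (for example 32-bit floating point versus 8-bit integers), so treat the ratios as indicative only.

```python
# Throughput figures as quoted above, in trillions of operations per second.
# These are the article's numbers, not independent measurements.
THROUGHPUT_TOPS = {
    "CPU (Threadripper 3990X)": 3,
    "GPU": 20,
    "NPU": 480,
}


def relative_to(baseline: str, figures: dict[str, float]) -> dict[str, float]:
    """Express each figure as a multiple of the chosen baseline."""
    base = figures[baseline]
    return {name: value / base for name, value in figures.items()}


if __name__ == "__main__":
    ratios = relative_to("CPU (Threadripper 3990X)", THROUGHPUT_TOPS)
    for name, ratio in ratios.items():
        print(f"{name}: {ratio:.1f}x the CPU")
```

Taken literally, the quoted figures put the GPU at roughly 6.7 times the CPU and the NPU at 160 times.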

What Are NPU Processors?

NPU is a term we come across more and more often in the world of smartphones. An NPU is a chip, or part of a chip, dedicated to running neural networks. Its particularity is that its answers come not from a fixed, hand-written calculation but from a trained model, which can be updated over time to improve its precision and speed. It is used, for example, to recognize what is in an image and to improve the quality of photos taken by the device.
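The idea that an NPU answers from learned data rather than from a hand-written calculation can be made concrete with the core operation neural networks rely on. The Python sketch below computes one fully connected layer with 8-bit integer values, built from multiply-accumulate (MAC) steps. The weights play the role of what the model learned. The function name, the values and the simple quantization scheme are illustrative assumptions, not any vendor's actual design. An NPU's advantage is that it performs thousands of these MACs in parallel in dedicated hardware, rather than one at a time as this loop does.

```python
def dense_int8(inputs: list[int], weights: list[list[int]],
               bias: list[int], scale: float) -> list[int]:
    """One fully connected layer with 8-bit quantized values (illustrative).

    Each output is a sum of multiply-accumulate (MAC) steps, rescaled back
    towards the int8 range and passed through a ReLU activation.
    """
    for v in inputs + [w for row in weights for w in row]:
        if not -128 <= v <= 127:
            raise ValueError(f"{v} does not fit in int8")
    outputs = []
    for row, b in zip(weights, bias):
        acc = b  # hardware would use a wide (e.g. 32-bit) accumulator
        for x, w in zip(inputs, row):
            acc += x * w  # one MAC operation
        # Rescale, apply ReLU (negatives become 0) and clamp to the int8 maximum.
        outputs.append(min(127, max(0, round(acc * scale))))
    return outputs


# Hypothetical weights standing in for what a model "learned" during training.
print(dense_int8([10, -3, 7], [[2, 1, 0], [-2, -1, 1]], [5, 0], 0.5))  # [11, 0]
```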

However, NPUs are not limited to mobile devices and can be used on a much larger scale for heavier applications. Intel, for example, unveiled its Nervana chips: the NNP-L (Neural Network Processor for Learning), dedicated to training, and the NNP-I (Neural Network Processor for Inference), dedicated to inference. Facebook was involved in their design. The social media giant is counting on AI to improve how its platform works, particularly for content moderation.

In PCs, one of the features of new processor designs is a unit entirely dedicated to the calculations needed for AI, called the NPU. It appears in our PCs as a separate device, like a processor in its own right.

But that’s not all: our PCs can also pick the compute unit best suited to each task from among the NPU, the CPU and the GPU. Short, intense, highly parallel workloads go to the GPU, and complex general-purpose work goes to the CPU. Workloads meant to run continuously over time are preferably handed to the NPU. In some cases, we will even be able to choose manually which one to use.
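This rule of thumb can be expressed as a simple selection function. The sketch below is hypothetical: the `Task` fields and the `pick_unit` function are invented for illustration. Real systems (the operating system, drivers and AI runtimes) apply their own, more detailed policies.

```python
from dataclasses import dataclass
from enum import Enum


class Unit(Enum):
    CPU = "CPU"
    GPU = "GPU"
    NPU = "NPU"


@dataclass
class Task:
    name: str
    is_ai_inference: bool   # does the task run a neural network?
    sustained: bool         # does it run continuously over a long period?
    highly_parallel: bool   # large, uniform, data-parallel bursts?


def pick_unit(task: Task) -> Unit:
    """Hypothetical heuristic mirroring the rule of thumb in the text."""
    if task.is_ai_inference and task.sustained:
        return Unit.NPU  # long-running AI work: favour low power draw
    if task.highly_parallel:
        return Unit.GPU  # short, intense parallel bursts
    return Unit.CPU      # complex, branchy, general-purpose logic


if __name__ == "__main__":
    for t in [
        Task("background blur in video calls", True, True, True),
        Task("export a 4K video", False, False, True),
        Task("compile source code", False, False, False),
    ]:
        print(f"{t.name}: {pick_unit(t).value}")
```

The NPU check comes first because a sustained AI task is often also highly parallel. Testing parallelism first would send it to the GPU and lose the power savings.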

NPU, Neural Networks On Your Device

Whereas the GPU works in parallel with the CPU on graphics and other parallel workloads, the NPU takes over AI functions that would otherwise fall to the CPU, and performs them much more efficiently. Because it is designed specifically for artificial intelligence workloads, an NPU can run these tasks far faster and with much lower power consumption. In smartphones, it is used in particular to improve photo processing, although it takes part in many other processes.

In addition, this architecture still has years of progress ahead of it. CPU and GPU gains, by contrast, are now smaller and rely mainly on increasing raw power. NPU technology is younger and still has a lot of room for improvement to deliver increasingly powerful performance.

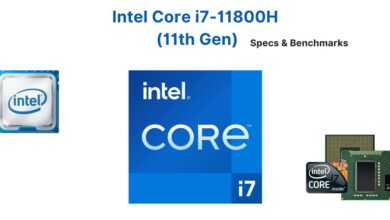

Intel Takes The Plunge With Its Core Ultra Chips

The era of “AI PCs” begins with Intel introducing its new Core Ultra notebook chips into the arena. Originally designed under the code name “Meteor Lake”, these processors are the first from Intel equipped with a neural processing unit (NPU) for the acceleration of AI tasks. The arrival of these new processors comes a week after AMD lifted the veil on its future Ryzen 8040 hardware, which also integrates NPUs.

Impressive Energy Efficiency, a Step Forward for Intel

Intel claims that its new Core Ultra processors consume up to “79% less standby energy on Windows than the previous generation of AMD’s Ryzen 7840U”. According to Intel, they are also up to 11% faster than AMD’s hardware in multithreaded tasks.

Core Ultra processors are manufactured on the company’s new Intel 4 process (a 7 nm-class node) and use its Foveros 3D packaging technology. According to Intel, this makes them “the most efficient x86 processor for ultra-thin systems”.

A Step Forward in The World of Gaming

The Core Ultra chips also feature Intel Arc graphics, up to twice as fast as the previous generation. This puts Intel in a more competitive position against AMD’s strong integrated graphics. For example, the Core Ultra 7 165H can run Baldur’s Gate 3 twice as fast as the Core i7-1370P at 1080p with medium graphics settings, and Resident Evil Village 95% faster.

But what advantages does an NPU bring?

For starters, NPU-optimized processing uses less battery: it can be up to 25x faster and up to 50x more power-efficient.
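Taken together, the two figures also say something about power draw. Assume both are measured against the same baseline (for example a CPU running the same job). Assume also that "power-efficient" means performance per watt, which is the same as energy per task. Under those assumptions, the arithmetic runs as follows, with $E$ for energy per task, $t$ for time per task and $P = E/t$ for power:

$$
\frac{E_{\text{NPU}}}{E_{\text{base}}} = \frac{1}{50}, \qquad
\frac{t_{\text{NPU}}}{t_{\text{base}}} = \frac{1}{25}, \qquad
\frac{P_{\text{NPU}}}{P_{\text{base}}} = \frac{E_{\text{NPU}} / t_{\text{NPU}}}{E_{\text{base}} / t_{\text{base}}} = \frac{1/50}{1/25} = \frac{1}{2}
$$

In other words, the NPU would draw about half the power of the baseline while finishing the job 25 times sooner. That combination is where the battery savings come from.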

A frequently cited example is the NPU in the Huawei Mate 20 Pro, which can process 200 photos in 5 seconds; the same job on a CPU would take 120 seconds. That’s why, in some cases, we stop talking about “processing” and start talking about “artificial intelligence”.
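The Mate 20 Pro figures translate into throughput and speedup with simple arithmetic. The sketch below uses only the numbers quoted for that example. The helper name is illustrative.

```python
def photos_per_second(photos: int, seconds: float) -> float:
    """Average throughput for a batch of photos."""
    return photos / seconds


PHOTOS = 200
NPU_SECONDS = 5     # figure quoted for the Mate 20 Pro's NPU
CPU_SECONDS = 120   # figure quoted for the same job on the CPU

npu_rate = photos_per_second(PHOTOS, NPU_SECONDS)   # 40 photos per second
cpu_rate = photos_per_second(PHOTOS, CPU_SECONDS)   # about 1.67 photos per second
speedup = CPU_SECONDS / NPU_SECONDS                 # same work, so time ratio = speedup

print(f"NPU: {npu_rate:.1f} photos/s, CPU: {cpu_rate:.2f} photos/s, speedup: {speedup:.0f}x")
# NPU: 40.0 photos/s, CPU: 1.67 photos/s, speedup: 24x
```

The resulting 24x speedup is in line with the "up to 25x faster" figure quoted above.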

Running artificial intelligence on an NPU can improve the performance of any device. It reduces battery consumption, improves security and adds more camera features. It can also learn from our habits and adjust settings so that the device always stays in optimal working condition.

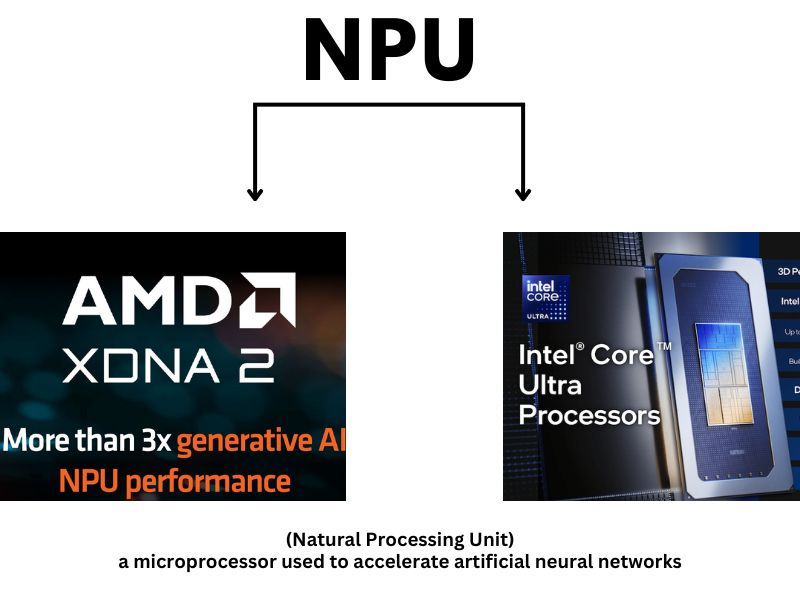

AMD Announces Its XDNA 2 NPU Architecture for 2024

This announcement is important because it gives a first look at what is planned, at least on paper, in terms of AI acceleration. AMD calls this Ryzen AI. Mobile processors are becoming more complex, since they now combine a classic CPU, an integrated graphics solution (iGPU) and an NPU. NPU stands for Neural Processing Unit: it is a neural network accelerator.

Ryzen AI, XDNA 2

At AMD, the architecture used to design this NPU is called XDNA. It is not new, since it is already present in the Ryzen 7040 “Phoenix” series. However, it has been optimized in the Ryzen 8040 “Hawk Point” series to increase its computing efficiency. AMD announces processing power rising from 10 TOPS to 16 TOPS (trillions of operations per second).
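A TOPS rating is a theoretical peak. A common convention, assumed here, counts each multiply-accumulate (MAC) as two operations and multiplies by the number of MAC units and the clock frequency. The sketch below applies that formula to made-up hardware, not to AMD's actual XDNA configuration. It also converts AMD's quoted jump from 10 to 16 TOPS into a percentage. Both function names are illustrative.

```python
def peak_tops(mac_units: int, clock_hz: float) -> float:
    """Peak TOPS, counting each multiply-accumulate as 2 operations."""
    return 2 * mac_units * clock_hz / 1e12


def uplift_percent(old_tops: float, new_tops: float) -> float:
    """Percentage increase from an old peak figure to a new one."""
    return (new_tops / old_tops - 1) * 100


# Hypothetical NPU: 4,096 MAC units at 1.25 GHz.
print(f"{peak_tops(4096, 1.25e9):.2f} TOPS")  # 10.24 TOPS

# AMD's quoted figures: 10 TOPS (Ryzen 7040) -> 16 TOPS (Ryzen 8040).
print(f"{uplift_percent(10, 16):.0f}% more peak throughput")  # 60%
```

A rating computed this way assumes every MAC unit is busy on every cycle. Real workloads rarely reach that, so TOPS figures are best compared with one another rather than read as delivered speed.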

As is often the case in IT, this is only the beginning, since AMD’s roadmap already mentions XDNA 2. This XDNA 2 NPU should deliver more than three times the performance of the first-generation XDNA found in the Ryzen 7040 series. The XDNA 2 architecture will be introduced with the Ryzen “Strix Point” generation, expected at the end of 2024.

AMD does not yet offer details regarding its “Strix Point” mobile processors. They are said to use the Zen 5 CPU architecture with more physical cores (up to 12), while the graphics part would use an iGPU based on the RDNA 3.5 architecture. Some models could carry up to 32 CUs (compute units) to compete with the integrated GPU of Apple’s M3 Max. In the end, if all this is confirmed, Strix Point chips will be defined by Zen 5, RDNA 3.5 and XDNA 2.

Sources: Cowcotland; Dell